The 9 Best AI Customer Service Software in 2026

Years ago, managing a support team meant living in a loop of repetitive questions: “How do I reset my password?” or “Do you have a discount?” Day after day, my team tackled the same inquiries manually. It wasn’t fun, and it certainly wasn’t efficient.

Then rule-based chatbots came along. They were a game-changer, until they weren’t. Too often, they just frustrated customers, trapping them in a loop of wrong answers while refusing to hand them off to a human support agent.

Modern AI customer support software is making those challenges a thing of the past. Today’s customer service AI can handle those repetitive queries, and it also boosts agent productivity by automatically summarizing conversations and polishing grammar. It can even create FAQ pages, analyze customer sentiment, extract actionable insights, and more.

In this guide, we’ll take a look at how today’s AI systems are different, explore some of the ways they can help you deliver better and faster customer support, and introduce you to the nine best AI customer service software options.

Overview of the 9 best AI customer service software

Get a quick summary of our picks for the best AI customer service software below. If you want more information about any of the tools, we share our detailed reviews of each platform later in this article.

Help Scout is best for teams wanting to gradually introduce AI to assist human agents. Its AI features can answer frequently asked questions automatically, draft and edit replies for your team, summarize long conversation threads, and translate replies into other languages.

Brainfish is a unique option because it can automatically create and maintain an entire help center from scratch by learning from customer interactions and documentation.

Zendesk stands out by offering guided knowledge base optimization, where its AI identifies content gaps and then helps you fill them by generating full articles from a few user-provided bullet points.

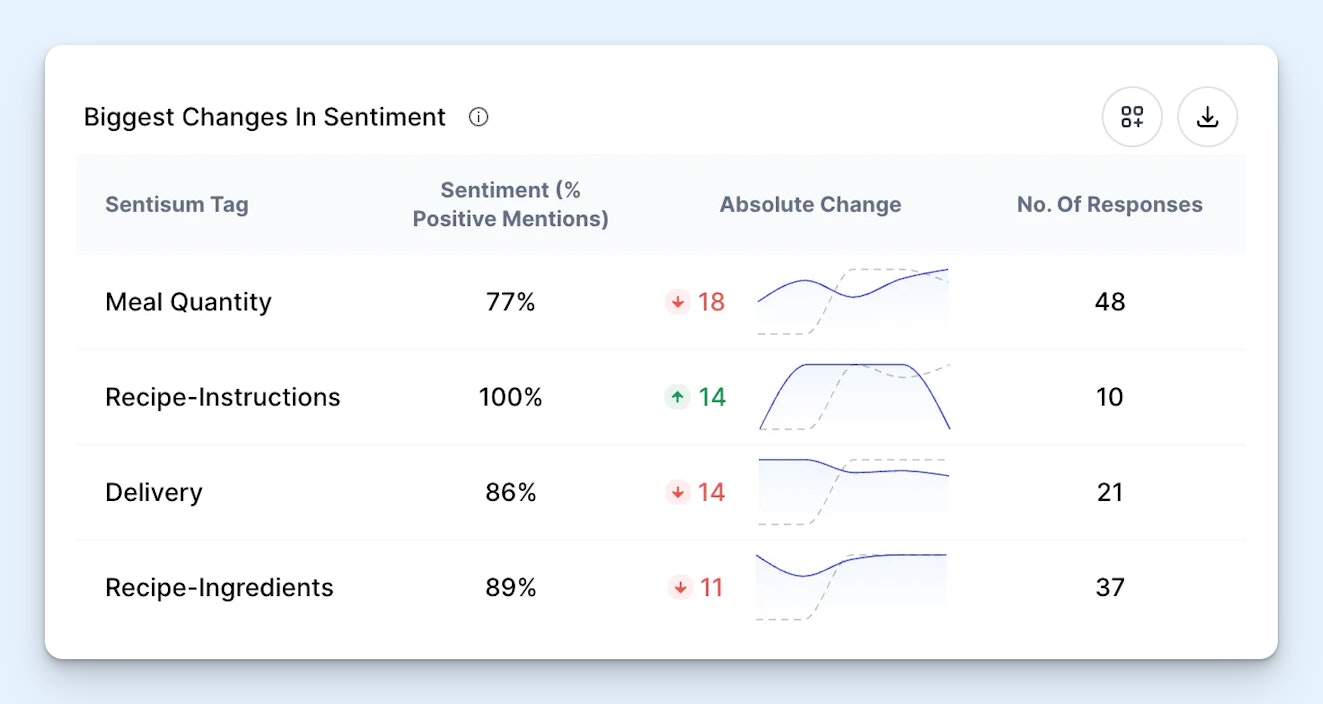

SentiSum acts as an analytics add-on that automatically tags support tickets and analyzes customer feedback, making it ideal for understanding customer sentiment and identifying recurring issues at scale.

Intercom is a great choice for performance analytics because its AI can analyze conversation sentiment to provide satisfaction scores and alert managers to potentially unhelpful agent replies, creating training opportunities.

Balto is designed specifically for call centers. It listens to live phone conversations to provide agents with real-time assistance like scripts and talking points while also helping supervisors with quality assurance.

Salesforce Service Cloud is an enterprise-focused platform that features the most advanced AI of all the help desks on this list. It also protects conversation data with its Einstein Trust Layer.

Tidio is perfect for AI skeptics as it allows you to monitor AI conversations with customers in real time and intervene if needed. When you do need to intervene, the AI learns from your corrections to improve future responses.

Productboard specializes in managing customer feedback by using AI to automatically summarize customer pain points from various sources and link them directly to relevant product features in your roadmap.

What is AI customer service software?

AI customer service software is a tool that leverages technologies like machine learning, natural language processing, and generative AI to automate a wide variety of tasks, such as answering customer questions, routing requests, detecting sentiment, identifying gaps in knowledge base content, and more. These capabilities improve both agent productivity and the overall customer experience.

How does AI customer service software work?

AI customer service software uses machine learning to continuously improve and adapt based on data. Most are trained on your knowledge base content and responses to customer support inquiries to increase the likelihood that the answers they provide are correct. They may also use feedback to continually refine the actions they take and responses they provide.

AI support systems also use natural language processing to understand requests much as a human would. Instead of triggering replies based on specific keywords or relying on answers provided in intake forms, they can extract the meaning from a query written in natural language and reply conversationally, asking follow-up questions when needed.

Take chatbots, for example. When a customer reaches out with a question, an AI-powered chatbot can use natural language processing to understand the question, retrieve relevant information from the knowledge base, and then use generative AI to write a response that feels natural and conversational.
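To make that retrieve-then-respond flow more concrete, here is a minimal Python sketch. It is not how any product on this list works internally: the knowledge base, the function names, and the `min_score` threshold are hypothetical, and simple word-overlap (cosine) scoring stands in for the embedding and language models real tools use. It finds the most relevant article, assembles the grounded prompt a generative model would receive, and returns `None` when nothing relevant turns up so the question can go to a human.

```python
import math
import re
from collections import Counter
from typing import Optional

# Hypothetical knowledge base; real tools index your help center automatically.
KNOWLEDGE_BASE = {
    "Reset your password": "Go to Settings > Security and click Reset password. "
                           "A reset link is emailed to you.",
    "Discount codes": "Enter a discount code in the Promo code field at checkout.",
    "Shipping times": "Standard shipping takes 3-5 business days.",
}


def tokenize(text: str) -> Counter:
    """Lowercase the text and count each word (a crude stand-in for NLP)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, top_k: int = 1, min_score: float = 0.1) -> list:
    """Return up to top_k (title, body) articles scoring at least min_score."""
    q = tokenize(question)
    scored = [(cosine(q, tokenize(f"{title} {body}")), title, body)
              for title, body in KNOWLEDGE_BASE.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [(title, body) for score, title, body in scored[:top_k] if score >= min_score]


def build_prompt(question: str) -> Optional[str]:
    """Build the grounded prompt a generative model would receive, or None to hand off."""
    articles = retrieve(question)
    if not articles:
        return None  # nothing relevant found: route the question to a human agent
    context = "\n".join(f"{title}: {body}" for title, body in articles)
    return f"Answer using only this context:\n{context}\n\nCustomer question: {question}"
```

Calling `build_prompt("How do I reset my password?")` pulls in the password article, while an unrelated question returns `None`. Handing off when no article clears the threshold is what keeps a bot from guessing and trapping customers in the wrong-answer loop described earlier.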

What can modern AI customer service platforms do?

There are several ways that AI customer service systems help teams deliver better, faster support:

Reply to customer inquiries: AI ticketing systems can automatically reply to inquiries received across a variety of channels, including email, chat, social, and even phone. Because they’re trained on your data (knowledge base articles, replies to historical requests, your website, and more), the answers they provide are far more likely to be accurate.

Write draft replies to requests: If you don’t want to fully hand the reins off to AI to do support for you, AI ticketing systems can also draft replies for you. Agents can then review those drafts, hit send if they’re correct, or edit them if they’re not quite right. This can save your team a lot of time and help you close support tickets more quickly.

Update information in other systems: If you connect your ticketing software to other tools you use — such as your ecommerce platform, billing system, or CRM — AI agents can also perform simple system actions like canceling orders, providing tracking information, issuing refunds, or updating customer data.

Perform translations and edit replies: AI can translate customer inquiries from one language into another so your agents can understand them, then translate their replies back into the original language so the customer can understand them too. It can also help you copyedit replies, adjust tone and sentiment, and shorten or expand responses.

Summarize information: AI can write summaries of long, back-and-forth exchanges and attach those summaries to support tickets so that agents picking up a complicated ticket can get up to speed quickly without having to read through the entire conversation history.

Route, prioritize, and escalate tickets: AI can automatically route support tickets to the right team/agent based on things like skillsets, availability, and subject-matter expertise. It can also perform sentiment analysis, identify customer attributes, and analyze request details to prioritize, categorize, and/or escalate tickets.

Gather insights, identify trends, and uncover issues: Some AI ticketing systems come with reporting features that can estimate customer satisfaction even when a customer didn’t complete a CSAT survey, identify agents who are providing unhelpful or unclear responses, and uncover self-service articles that are outdated or incorrect.

Create new knowledge base articles: Some AI ticketing systems let you instantly turn a customer support conversation into a knowledge base article. Others let you provide an outline or a few bullet points and then expand that input into a complete article.

Help your agents reply to inquiries: Many AI ticketing systems come with copilots that provide your agents with assistance while they’re doing their work, such as recommending relevant help center articles, suggesting saved replies that could be used, or surfacing recently closed requests that might contain the information they need.
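Routing and prioritization are the most mechanical of these capabilities, so they are easy to sketch. The minimal Python example below assumes hypothetical keyword lists and a hypothetical `Agent` record; production systems typically use trained classifiers and sentiment models rather than keyword matching. It tags a ticket with a topic and priority, then assigns it to the available agent who has the right skill and the fewest open tickets.

```python
import re
from dataclasses import dataclass

# Hypothetical keyword lists; production tools usually rely on trained classifiers.
TOPIC_KEYWORDS = {
    "billing": {"refund", "invoice", "charge", "charged"},
    "shipping": {"tracking", "delivery", "shipped", "package"},
}
URGENT_WORDS = {"angry", "urgent", "unacceptable", "immediately"}


@dataclass
class Agent:
    name: str
    skills: set
    available: bool = True
    open_tickets: int = 0


def classify(text: str) -> tuple:
    """Return (topic, priority) from simple keyword matches."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    # First topic whose keywords overlap the ticket text, else "general".
    topic = next((t for t, kw in TOPIC_KEYWORDS.items() if words & kw), "general")
    priority = "high" if words & URGENT_WORDS else "normal"
    return topic, priority


def route(text: str, agents: list) -> tuple:
    """Assign the ticket to the least-loaded available agent with the needed skill."""
    topic, priority = classify(text)
    candidates = [a for a in agents if a.available and topic in a.skills]
    if not candidates:
        return topic, priority, None  # no match: leave it in a shared queue for triage
    chosen = min(candidates, key=lambda a: a.open_tickets)
    chosen.open_tickets += 1
    return topic, priority, chosen.name
```

If no available agent has the required skill, the function returns `None` for the assignee so the ticket waits in a shared queue instead of landing with the wrong person.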

What are the benefits of using AI customer service software?

The capabilities of AI support platforms that we covered above deliver four major benefits to customer support teams:

Faster response times: AI support software improves response times by automatically answering questions, suggesting answers for reps, offering writing assistance, summarizing conversations, and getting requests to the right team/agent immediately.

Less strain on human reps: Repetitive tasks like sorting and assigning tickets, providing order updates, and answering FAQs can drain human agents. Handing that work to AI can make agents’ jobs more enjoyable and give them more time to focus on the work only a human can do.

Higher customer satisfaction: Faster response times and access to more robust self-service tools like AI chatbots can improve the overall customer experience of interacting with support, leading to more satisfied customers.

Reduced operational costs: By automating repetitive tasks and responding to simple customer issues across multiple channels, you can deliver high-quality customer service with fewer support agents. This can lead to significant cost savings.

The 9 best AI customer service software

Below, you’ll find our reviews of the nine best AI customer service software options. We’ve included many different types of tools (help desks, chatbots, knowledge base solutions, QA tools, and sentiment analysis platforms) so you can find the perfect solution for your team’s needs.

1. Help Scout – Best for gradual AI adoption

If your goal for adopting an AI ticketing system is to automate everything and replace your entire support team with AI agents, Help Scout isn’t the right solution for you. However, if you want to gradually add AI into your support mix as a means of improving the customer experience and creating opportunities for your agents to do more engaging and fulfilling work, it’s perfect.

When you sign up for Help Scout, you get access to email, self-service, chat, and social support — all managed in a shared inbox. You can build private and public knowledge bases, serve proactive messages to app users and website visitors, integrate with Shopify, conduct NPS and CSAT surveys, and much more.

Help Scout’s AI features are trained on your data (help center articles, previous replies to customers, and even your websites) so that everything they produce is accurate and on brand:

AI Summarize condenses lengthy back-and-forth conversations into concise bullet points with just a click. This eliminates the need for time-consuming manual reviews, allowing agents to quickly get up to speed on ongoing issues.

AI Assist gives your agents the tools to fine-tune both replies to customers and knowledge base articles. Use it to adjust the tone of a message, modify its length, fix spelling and grammar, or translate it into another language.

AI Drafts leverages generative AI, past support conversations, and your knowledge base articles to draft responses to customer questions. Unlike autonomous bots, which risk sending inaccurate responses, AI Drafts lets agents review, edit, and personalize each draft before it’s sent.

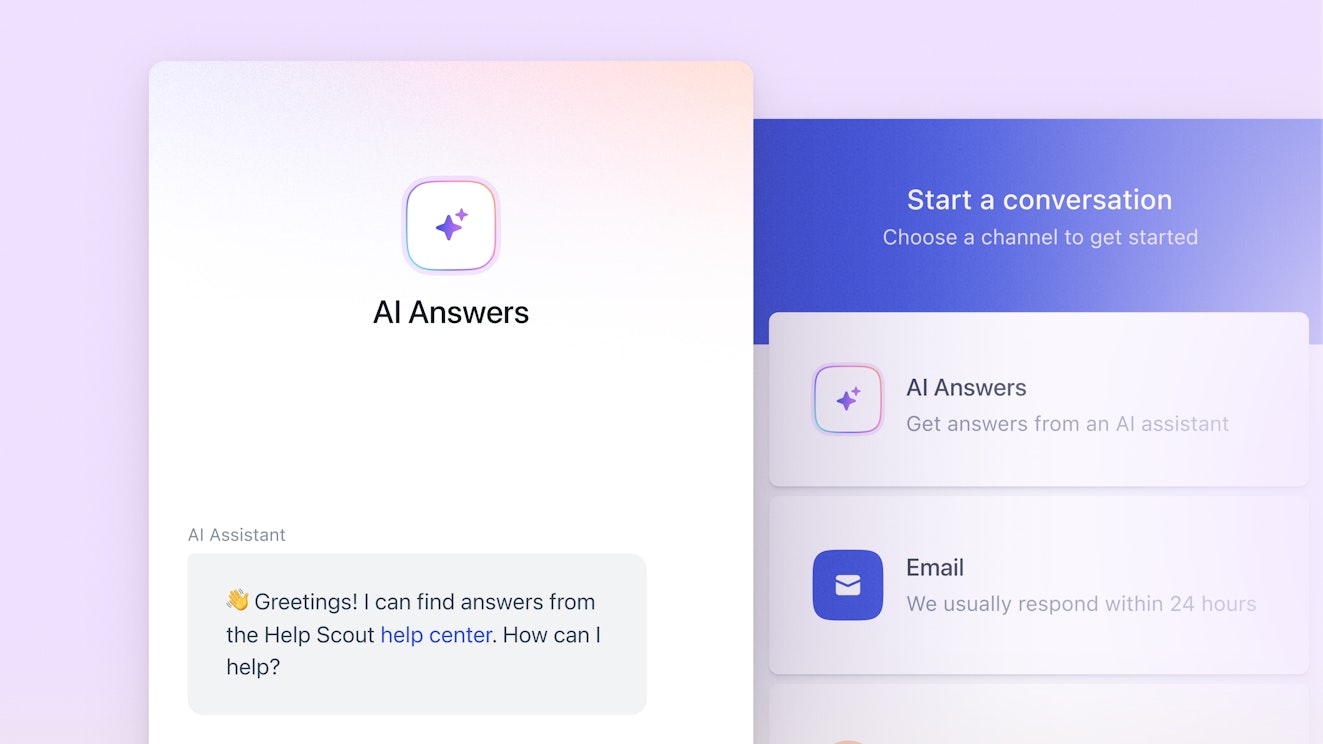

AI Answers is designed to provide clear, simple responses to straightforward customer inquiries. Customers will know that they’re interacting with AI, setting realistic expectations. And if the AI response doesn’t fully resolve their issue, switching to a human agent for further support is easy.

If you’re looking for AI customer service software that empowers your team to deliver exceptional, customer-centric service, Help Scout is the perfect solution.

Pricing

Free plan and trial available. View Help Scout’s current pricing.

2. Brainfish – Best for creating a fully automated help center

If you don’t already have a help center — or if your team is struggling to keep your existing help center updated — Brainfish is a good option to consider. It can create a fully functional help center for you from scratch based on customer interactions, and it also updates outdated knowledge base articles for you automatically.

Brainfish integrates with a number of systems — your product, help desk, CMS, and website — and uses the data it collects to learn what to add to, update, and remove from your knowledge base.

For example, if it notices that users commonly have challenges with a specific feature of your product, it uses that information — and any related support responses or other documentation — to write a help center article for you.

Once your knowledge base is up and running, Brainfish can be used as a chatbot that provides your customers with instant answers to their questions. It can also provide "nudges" that proactively recommend helpful content when it thinks customers are struggling with something they’re trying to do.

Pricing

No free trial offered. View Brainfish’s current pricing.

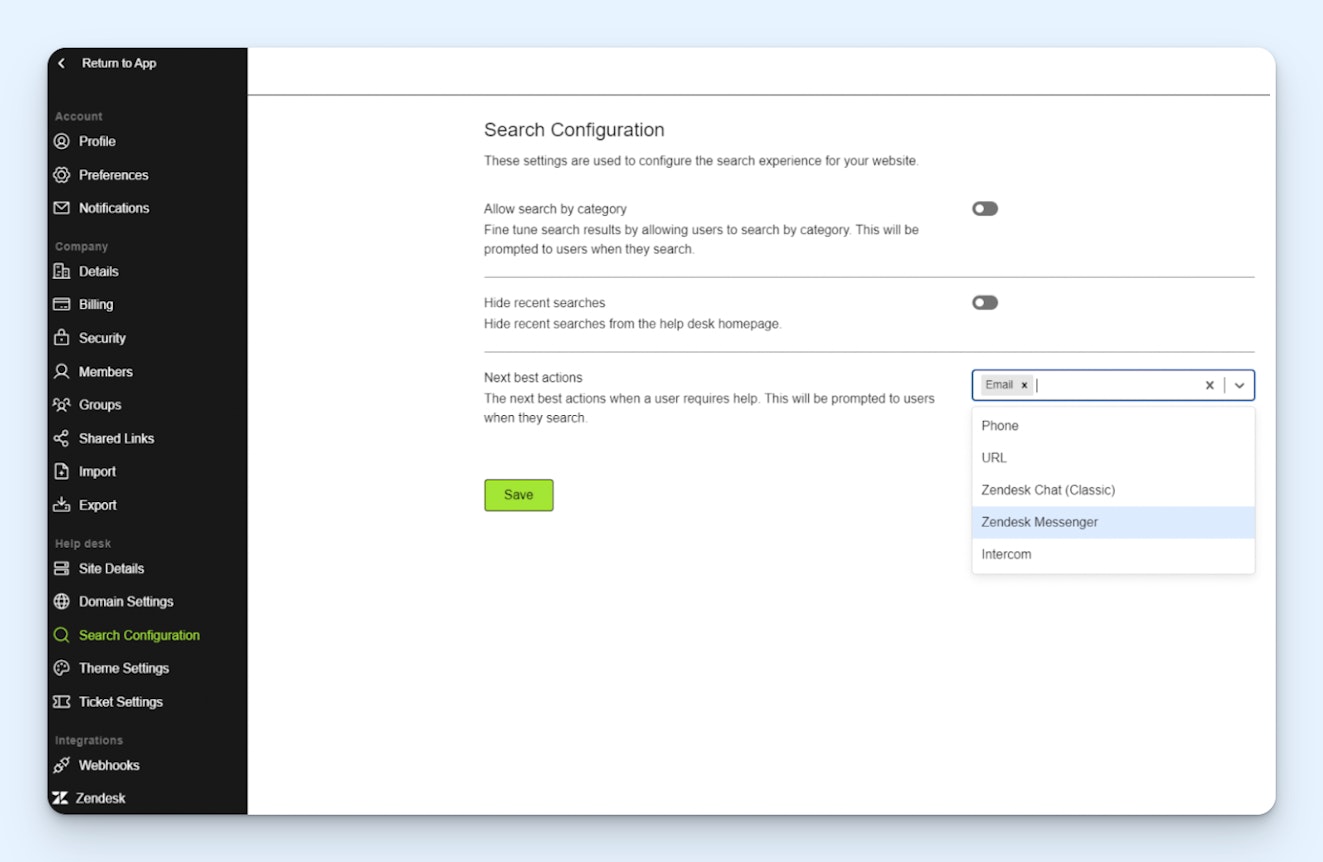

3. Zendesk – Best for guided knowledge base optimization

Zendesk is an enterprise-focused help desk with every feature customer service teams could ever possibly need. It gives you tools to deliver support across all channels, lets you create client portals and community forums, integrates with nearly 2,000 different platforms, and even lets you create custom apps and workflows using Zendesk Sunshine™.

As for its AI features, Zendesk has AI agents that automatically reply to customers, copilots that recommend replies to agents, and AI coaching that alerts agents to issues in their replies. You can also use AI to optimize your work schedules automatically based on historical data. However, these features are only available as add-on products at additional cost.

Where Zendesk really stands out is with its tools to help you improve your knowledge base. Zendesk’s AI automatically identifies coverage gaps in your help center. To fill those gaps, you can simply write a few bullet points specifying what should be covered, and its AI will turn them into a full knowledge base article for you.

This makes it a more valuable option than Brainfish for teams that are nervous about letting AI automatically create and update their help center content. You still enjoy the benefits of content gap notifications and auto-generated drafts, but you’ll have more control over what ultimately gets published.

Pricing

Free trial available. View Zendesk’s current pricing.

4. SentiSum – Best for AI-powered insights and sentiment analysis

Manually tagging support tickets might work when you only receive a handful each week, but for teams handling hundreds or thousands of conversations, it’s a major waste of time.

That’s where SentiSum steps in, offering an AI-driven solution to automate insights and help you understand your customers better. It integrates with many help desk platforms and acts as an analytics add-on that automatically applies tags to your support tickets and extracts insights from customer feedback.

This data helps teams understand customer sentiment at scale, pinpoint the reasons customers are contacting support, and spot trends in recurring issues.

SentiSum can handle substantial amounts of data, but it can get costly. If you’re a mid-market or enterprise business looking to automate insights and gain a deeper understanding of customer feedback, it’s a solution worth exploring.

Pricing

No free trial offered. View SentiSum’s current pricing.

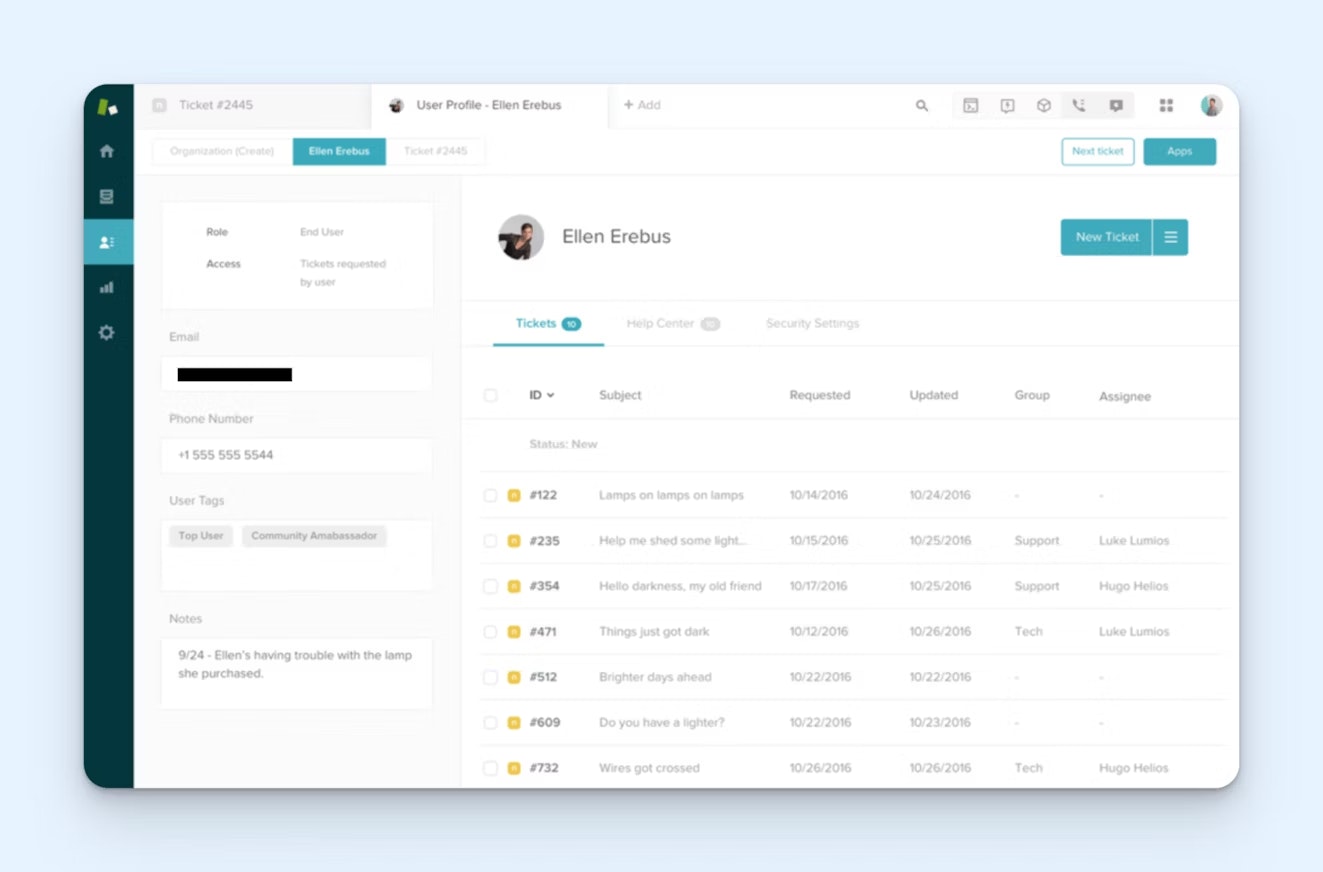

5. Intercom – Best for AI-powered performance analytics

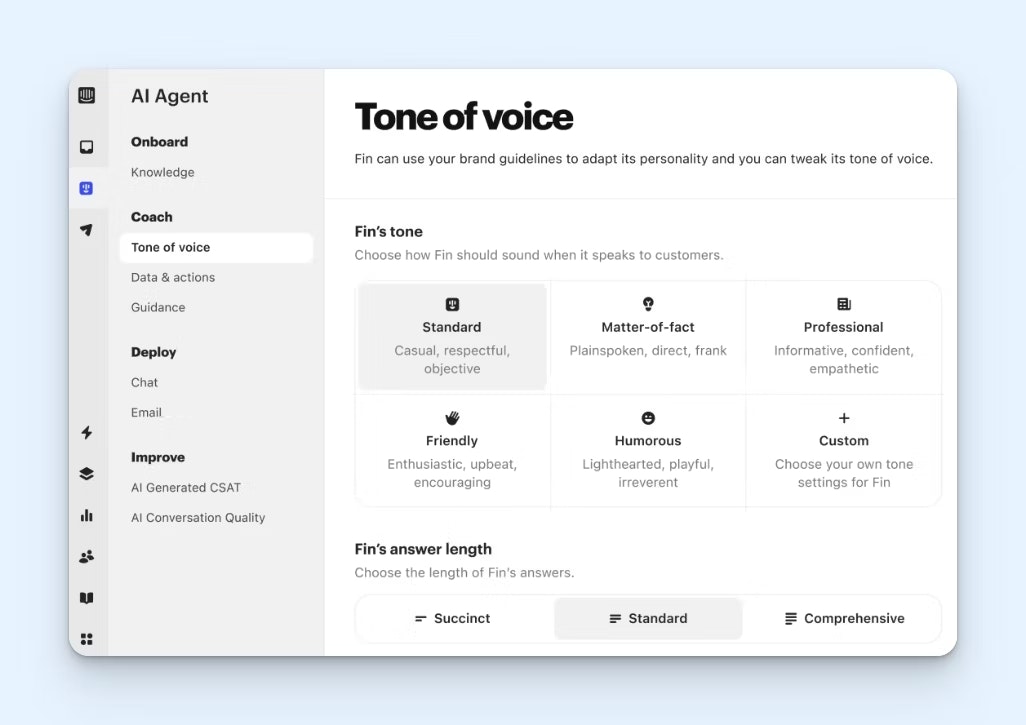

Intercom’s AI agent, Fin, does a solid job of tackling repetitive, straightforward questions via live chat. You can connect Fin to a wide variety of resources, including entire websites, files, quick fact snippets, previous customer interactions, and even custom answers to frequently asked questions. You can also fine-tune the bot’s personality to align with your brand voice.

Fin also comes with an interface for analyzing unanswered questions so you can easily identify content gaps and add new resources to improve coverage over time.

The interesting thing about Fin is that you can use it as a standalone chatbot regardless of what help desk you use — you don’t need to use Intercom’s help desk to take advantage of Fin.

However, Intercom’s help desk does offer a few more AI features you may be interested in. It can analyze sentiment to provide customer satisfaction scores on all conversations and alert you to replies sent by your human agents that the AI believes weren’t helpful, helping you identify training opportunities for your team.

Pricing

Free trial available. View Intercom’s current pricing.

6. Balto – Best AI customer service software for call centers

AI support tools aren’t limited to text-based channels. Integrated into phone support, they can greatly improve both agent performance and the customer experience.

As agents engage with customers, Balto listens in on the conversation and helps streamline the process by:

Displaying key talking points to guide the call and offering helpful phrases and questions when agents need them most.

Automatically generating answers to questions and providing scripts to agents.

Providing live chat for reps to instantly connect with managers for guidance during critical calls.

Phone support can be especially stressful for agents, as there’s little room to pause, research, or craft thoughtful responses. By providing real-time support, AI tools like Balto empower agents to feel more confident and in control.

On the supervisor side of things, Balto’s AI also helps you spot issues and training opportunities by automatically flagging compliance issues for you to review and scoring calls for quality assurance. It can also automatically redact sensitive information like PHI from call transcripts, notes, and summaries.

Pricing

No free trial offered. Contact Balto for pricing.

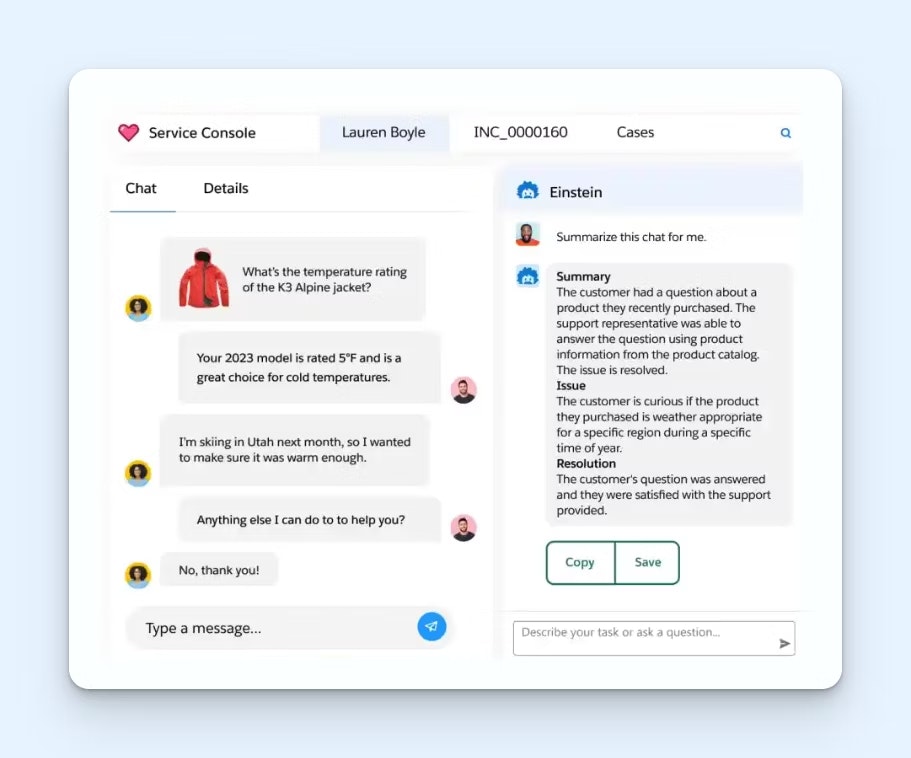

7. Salesforce Service Cloud – Best AI help desk for enterprise companies

Salesforce Service Cloud is built (and priced) for enterprises, but it has the most advanced AI capabilities of all of the apps on this list. Salesforce’s AI can:

Answer customer questions in your help center or via SMS or messaging channels.

Draft replies for agents or suggest relevant help center articles to reference.

Create step-by-step plans for agents explaining how to solve cases.

Route, categorize, and assign tickets based on skills, availability, and historical performance.

Summarize long conversation threads.

Translate support requests and replies into other languages.

Transform support team replies into knowledge base articles.

One standout feature is that in addition to being able to understand text that customers send in with their support requests, it can also process and understand pictures, videos, and audio files.

AI agent conversations in Salesforce Service Cloud are also highly secure due to Salesforce’s Einstein Trust Layer, which uses features like dynamic grounding, zero data retention, and toxicity detection to protect the privacy and security of your data.

Pricing

Free trial available. View Salesforce Service Cloud’s current pricing.

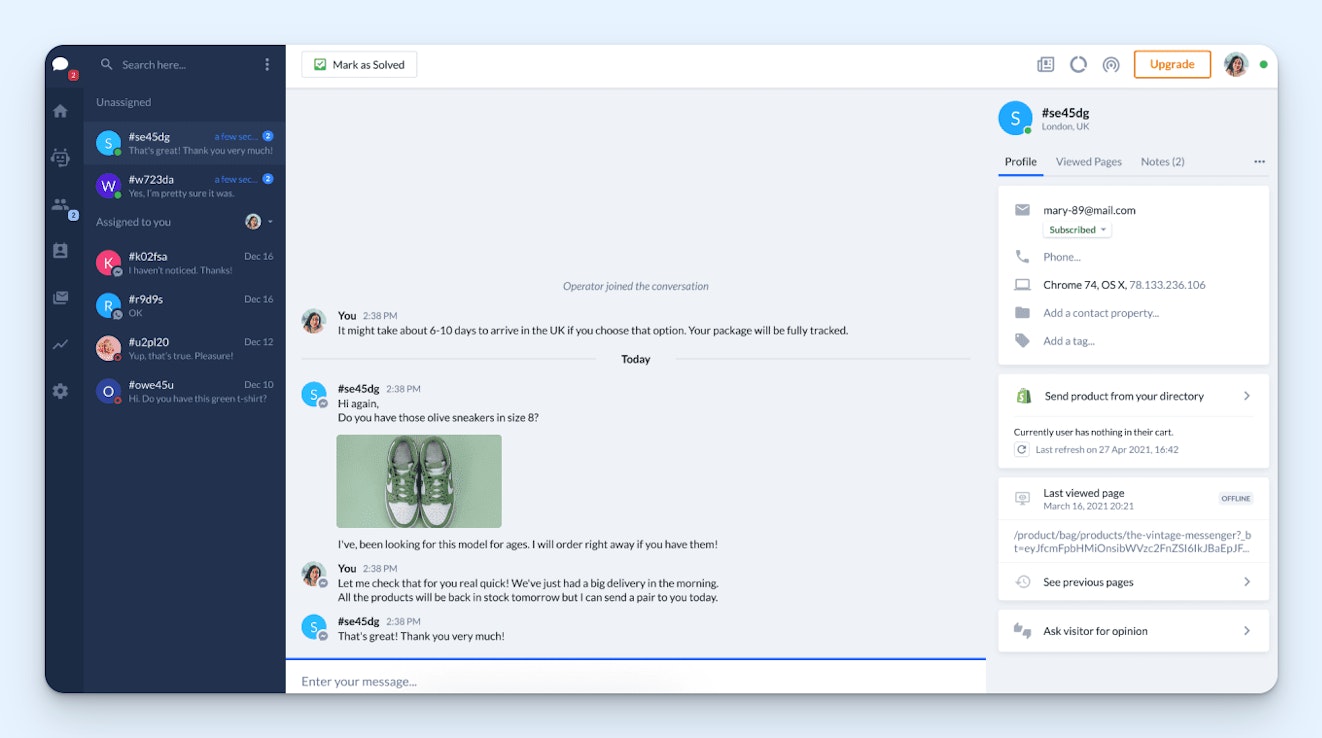

8. Tidio – Best for AI skeptics

If you’re still feeling skeptical about AI’s ability to deliver the same level of support that a human would and want to try it out in a low-risk way, Tidio is a good AI system to consider. It lets you monitor the conversations AI is having with customers in real time so you can keep an eye on what it’s doing and jump in if things start to go south.

It also learns from the responses you send when you jump in, making it less likely to make the same mistakes again in the future.

You can train Tidio’s chatbot, Lyro, on different knowledge sources like an FAQ page or your website, and it can automate all sorts of tasks such as checking order statuses, answering basic questions, and recommending products to customers based on your Shopify inventory. For more complex situations, you can use Tidio’s Flows feature to build chatbot flows manually.

Pricing

Free trial available. View Tidio’s current pricing.

9. Productboard – Best for managing customer feedback

Customer feedback is helpful and can give you a direct line to what will truly benefit your users. But for a small support team, managing a lot of feedback can feel overwhelming.

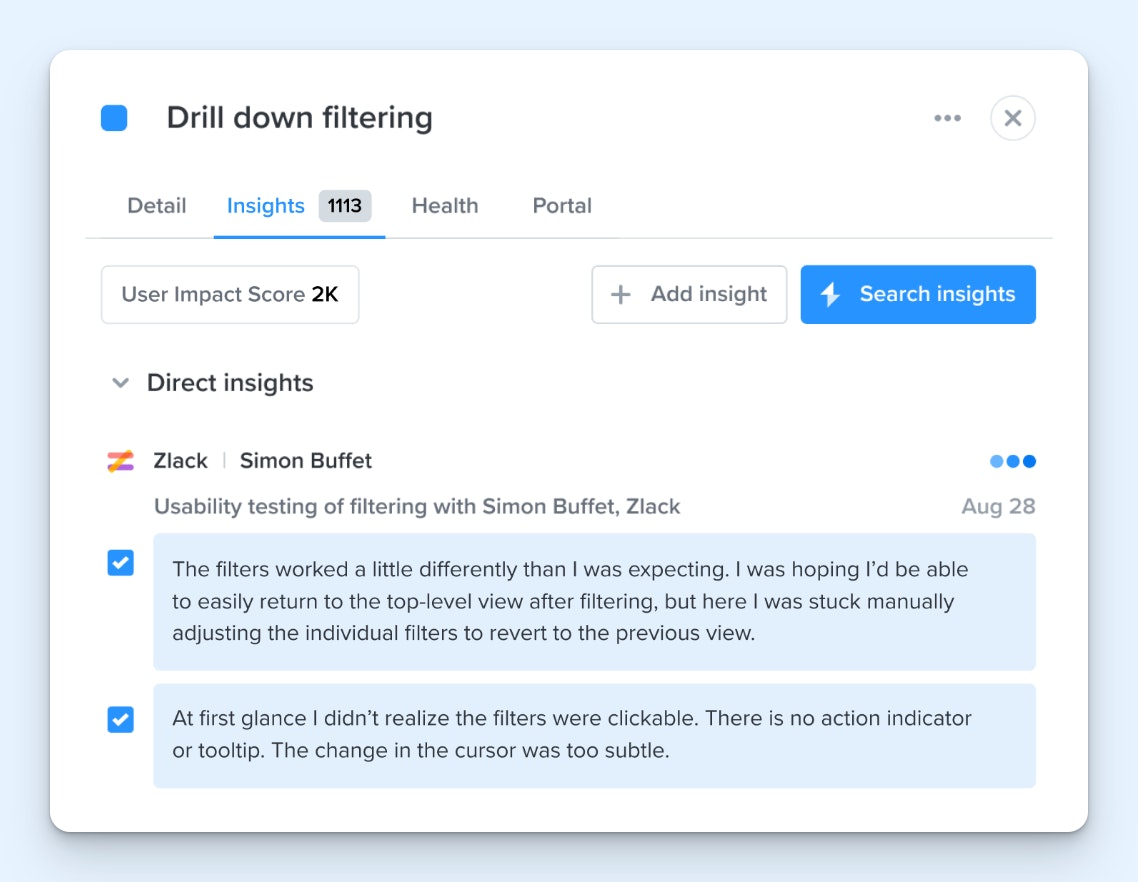

Productboard integrates with a variety of help desk, survey, and communication tools to centralize customer insights. These insights can then be linked directly to product areas, features in your roadmap, or items in your backlog to provide a clear view of what improvements or new features will have the biggest impact.

Productboard’s AI features make the process quick and efficient by identifying and summarizing customer pain points. Instead of reading every back-and-forth conversation, you can review concise summaries that capture the core of what customers are asking for. Feedback is then automatically connected to relevant features or projects in Productboard.

Productboard also uses natural language processing to help you search the feedback you’ve received, so you can find what you’re looking for even when it doesn’t use the exact words you searched for. Finally, it provides an AI writing tool that makes it easy to turn customer feedback into feature briefs.

Pricing

Free plan and trial available. View Productboard’s current pricing.

Choosing the right AI customer service software for your team

Choosing the right AI tool for your customer support team can feel overwhelming given the variety of options available. To make the right decision, start by evaluating three key factors.

Support conversation volume

If you’re managing a high-volume team handling thousands of requests each day, even small efficiency gains can have a significant impact. In this case, look for tools that help scale operations while maintaining quality:

Chatbots for deflecting common repetitive queries and reducing the number of tickets requiring agent intervention.

Automated QA tools to help maintain quality while saving on costs. With a high volume of tickets, manual QA reviews can become costly and time-consuming.

AI-powered reporting software that can analyze large volumes of data, uncover patterns, and generate valuable insights for data-driven decisions.

On the flip side, if you’re working with a lower ticket volume, investing in AI to automate high-volume work may not yield as much value.

For smaller teams, automated tagging or AI-powered QA reviews may not be necessary as the volume may not justify the investment. Instead, look at solutions that help your small team increase efficiency as you scale:

AI help desks streamline ticket management and customer queries, giving agents more time to focus on complex issues.

AI knowledge base solutions can help your agents quickly access and create documentation, making the support process more efficient.

Ticket complexity

The complexity of the support requests your team handles should also influence your choice of AI tools.

If your team deals with intricate, case-specific issues (e.g., debugging or custom engineering solutions), conversational AI chatbots may not be the right fit — they still struggle with tickets that require a deep understanding of logs, customer use cases, or creative problem-solving. Instead, look for AI tools that enhance the capabilities of your agents:

Internal workflow automation tools to streamline processes like ticket routing.

AI assistants for tone and grammar corrections.

AI-powered documentation software to generate detailed guides and articles based on support tickets or bullet points.

If your team handles simpler queries — like many ecommerce brands, for instance — then AI tools designed to empower self-service will be of great help. This includes:

Chatbots for automating responses to common questions.

AI knowledge bases that can return results based on search intent rather than just keywords, allowing customers to resolve common queries on their own.

Key customer experience objectives

Finally, what are you trying to achieve with AI? Your goals will help determine which tools are the best fit for your team:

If your goal is to keep up with the high volume of tickets and reduce the load on your support team, AI-powered knowledge bases, chatbots, and self-service solutions can help deflect tickets by enabling customers to find solutions on their own.

If you’re focused on providing a personalized, high-touch customer experience, look for tools that empower your agents. Automated internal workflows, conversation summaries, and AI-driven assistance can free up your agents’ time so they can focus on providing exceptional support.

While all the tools listed above may seem tempting, your current situation and top goals will guide where to start implementing AI in your support team.

Tips for implementing AI customer service software

Choosing the AI support software you’ll use is only half the battle. Once you have the system in place, follow these tips for a successful implementation.

Talk to your team

Much of the news about AI focuses on how it’s going to take everyone’s jobs, so it’s natural if your team feels a little anxious about you introducing it into your support process. Taking time to talk to them about your goals for AI before you start implementing it can ease their anxiety and get them more excited about helping you test and launch it.

Clean up your training data

Some AI-powered ticketing systems let you choose the specific assets the AI is trained on. Others will simply crawl your knowledge base, historical ticket data, and website to build knowledge.

If your system is in the latter group, it’s important to take time upfront to make sure that the information in these systems is accurate and up to date. Otherwise, you’ll end up with an AI that’s poorly trained on inaccurate data.

Start small

While it might be tempting to immediately take advantage of all of the new AI features you have available to you, doing so will make the process more time-consuming and error-prone.

Instead, choose one area you want to focus on — maybe one related to a specific challenge your team is having — and focus on launching and refining the AI for that specific use case.

For example, let’s say you want to improve your time to resolution. A good place to start might be to have AI write draft replies to service requests for your agents. This will help you get replies back to customers faster while also allowing you to monitor the accuracy of the AI’s responses.

Once you get to the point where you feel like the AI is writing drafts that are good enough to send without editing, you can think about launching it as an AI agent to answer customers’ questions on its own.

Test thoroughly

If the first feature you want to use is having AI reply directly to customers, test it rigorously before launch to confirm that it interprets customers’ questions correctly and provides accurate answers. Otherwise, you risk hurting the customer experience.

If you’re using it for ticket routing, send several test queries to make sure requests end up in the right place.

You’ll also need to work with your security team to test that the AI never shares protected data. If the AI can make changes in your systems for you (such as updating orders or changing shipping addresses), make sure it has a way to verify that the person writing in is who they claim to be.
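One common pattern for that validation is a one-time code sent to the contact details already on file. The Python sketch below is a hypothetical illustration, not a feature of any tool reviewed here: the in-memory code store and the function names are assumptions, and a real deployment would deliver the code by email or SMS and expire it after a few minutes. The AI agent only performs the requested action after the customer supplies the matching code.

```python
import hmac
import secrets
from typing import Callable, Dict

# Hypothetical in-memory store mapping customer IDs to pending one-time codes.
_pending_codes: Dict[str, str] = {}


def send_verification_code(customer_id: str) -> str:
    """Generate a 6-digit code; a real system would email or text it, not return it."""
    code = f"{secrets.randbelow(10**6):06d}"
    _pending_codes[customer_id] = code
    return code


def perform_sensitive_action(customer_id: str, submitted_code: str,
                             action: Callable[[], str]) -> str:
    """Run `action` only if the submitted code matches; each code works once."""
    expected = _pending_codes.pop(customer_id, None)  # single use, even on failure
    if expected is None or not hmac.compare_digest(expected, submitted_code):
        return "verification failed: hand off to a human agent"
    return action()
```

Using `hmac.compare_digest` avoids leaking timing information during the comparison, and removing the code on every attempt means a guessed or replayed code cannot be retried.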

Give it time to learn

AI tools need a calibration period to deliver optimal results, so don’t expect perfection out of the gate. In most cases, it takes at least a few weeks for AI agents to fine-tune their performance, from generating draft responses to aligning with your desired QA standards.

During this time, actively monitor, train, provide feedback, and adjust settings based on what’s working and what’s not.

Take the right approach to using AI in customer support

Implementing AI customer support software doesn’t mean replacing your human agents with AI — it means using AI to enhance and support your team.

As you explore AI solutions, focus on ways to streamline internal workflows and reduce the burden of repetitive tasks. Human connection remains at the heart of a great customer experience, and AI can free up your team to focus on making those connections happen.